Dealing with latency

Audio latency is primarily an issue for live recording, where the monitor mix provided to artists must not lag their live performance by more than a few milliseconds, but it is desirable to keep latency below about 20 milliseconds even for mixing and other non-live work. The presentation discussed three basic latency-management techniques, the first of which the author has already implemented in the demo software on GitHub.

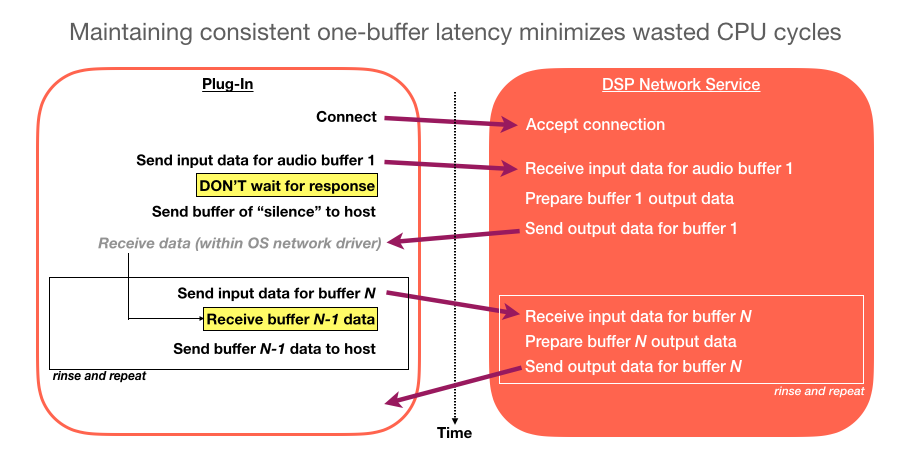

- The plug-in remains one buffer behind the remote DSP module, so the effective latency is always exactly one buffer interval (set by the DAW's buffer size and sample rate, and no more than 12 milliseconds).
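
A minimal Python sketch of the one-buffer-behind scheme follows. `remote_process()` is a hypothetical stand-in for the round trip to the remote DSP module (here just a fixed gain), and the network exchange is simulated synchronously, so this illustrates only the buffering logic, not the demo's actual implementation. `buffer_latency_ms()` shows the arithmetic behind the DAW-determined interval: buffer size divided by sample rate, so 512 samples at 44.1 kHz come to about 11.6 ms.

```python
from collections import deque
from typing import Deque, List

Block = List[float]


def buffer_latency_ms(buffer_size: int, sample_rate: int) -> float:
    """Length of one buffer interval in milliseconds."""
    return 1000.0 * buffer_size / sample_rate


def remote_process(block: Block) -> Block:
    """Hypothetical stand-in for the remote DSP module (a fixed 0.5 gain)."""
    return [0.5 * s for s in block]


class OneBufferBehindPlugin:
    """Render callback that always outputs the result for the previous buffer."""

    def __init__(self, buffer_size: int) -> None:
        # Processed buffers not yet output. Priming the queue with one buffer
        # of silence is what introduces exactly one buffer interval of latency.
        self.pending: Deque[Block] = deque([[0.0] * buffer_size])

    def render(self, block: Block) -> Block:
        # Hand the current buffer to the "server". Here this is a synchronous
        # call; in a real plug-in the reply would arrive during the next interval.
        self.pending.append(remote_process(block))
        # Output the result that belongs to the previous call.
        return self.pending.popleft()


if __name__ == "__main__":
    plugin = OneBufferBehindPlugin(buffer_size=4)
    print(plugin.render([1.0] * 4))  # [0.0, 0.0, 0.0, 0.0] (pipeline filling)
    print(plugin.render([2.0] * 4))  # [0.5, 0.5, 0.5, 0.5] (first buffer's result)
    print(round(buffer_latency_ms(512, 44100), 2))  # 11.61
```

The priming buffer of silence is the entire cost of the scheme: every output buffer is the processed version of the input from one interval earlier, which gives the remote side a full interval to respond.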

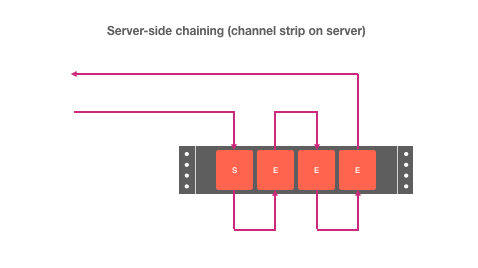

- Chaining connected DSP modules on the server side eliminates unnecessary network round trips, and with them the latency that would otherwise compound at each step.

- Low-latency monitor mixes (for performer feedback during recording) can be computed on the server side and fed back to the control room using standard digital audio connections (e.g., MADI or Audio-over-Ethernet).
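
The effect of chaining on the server side can be sketched in Python, assuming each DSP module is a plain function from buffer to buffer (`gain` and `offset` are hypothetical modules, not part of the demo). `chain_latency_ms()` compares two cases under a stated assumption: if every module in a chain is reached through its own network round trip costing one buffer interval, the delays add up per module, whereas a chain assembled on the server costs a single round trip.

```python
from typing import Callable, List, Sequence

Block = List[float]
Module = Callable[[Block], Block]


def gain(g: float) -> Module:
    """Hypothetical DSP module: scale every sample by g."""
    return lambda block: [g * s for s in block]


def offset(d: float) -> Module:
    """Hypothetical DSP module: add a constant d to every sample."""
    return lambda block: [s + d for s in block]


def process_chain_on_server(block: Block, chain: Sequence[Module]) -> Block:
    """Run the buffer through every module in order, without leaving the server."""
    for module in chain:
        block = module(block)
    return block


def chain_latency_ms(num_modules: int, buffer_ms: float, server_side: bool) -> float:
    """Added latency of a chain, assuming each network round trip costs one
    buffer interval (as in the one-buffer-behind scheme)."""
    round_trips = 1 if server_side else num_modules
    return round_trips * buffer_ms


if __name__ == "__main__":
    chain = [gain(2.0), offset(1.0)]
    print(process_chain_on_server([1.0, 2.0], chain))  # [3.0, 5.0]
    print(chain_latency_ms(3, 10.0, server_side=False))  # 30.0
    print(chain_latency_ms(3, 10.0, server_side=True))   # 10.0
```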

A fourth programming technique is already implemented in the existing demo code, but was only mentioned on one of the author's supplementary presentation slides, reproduced here:

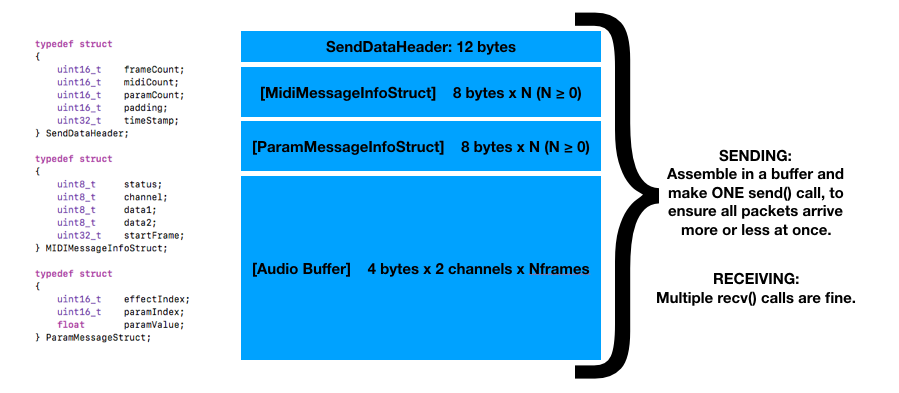

The message structure is discussed in more detail in the context of standardization. Here our concern is only the use of the network-socket send() and recv() calls.

The protocol uses a series of headers so that incoming messages can be read one chunk at a time. (Each header provides enough information to determine the size of the next chunk.) Reading a message this way, with multiple recv() calls, is perfectly fine, as long as you can be confident that the entire message has already arrived in the network stack's receive buffer. To ensure this, it's best to assemble the entire message in memory on the sending side, then issue a single send() call. The sending side will almost certainly split the message into multiple packets (on Ethernet, the usual limit is only about 1500 bytes per packet), but with a single send() call these packets are fired off in rapid succession and will normally arrive within milliseconds of each other.
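
The sending and receiving sides described above can be sketched in Python. The header layout (a 32-bit message type and a 32-bit payload length, little-endian, followed by float32 samples) and all function names are hypothetical, not the demo's actual protocol. `sendall()` serves as the closest Python counterpart of a single send() call: the whole assembled buffer goes in one API call, and it internally retries if the OS accepts only part of it. On the receiving side, `recv_exact()` loops because recv() may legally return fewer bytes than requested.

```python
import socket
import struct
from typing import List, Tuple

# Hypothetical header: message type and payload length in bytes,
# both unsigned 32-bit little-endian integers.
HEADER = struct.Struct("<II")


def build_message(msg_type: int, samples: List[float]) -> bytes:
    """Assemble header + payload in memory so the message can go out in one call."""
    payload = struct.pack(f"<{len(samples)}f", *samples)
    return HEADER.pack(msg_type, len(payload)) + payload


def send_message(sock: socket.socket, msg_type: int, samples: List[float]) -> None:
    # One call for the whole message; sendall() keeps handing bytes to the
    # OS until the entire buffer has been accepted.
    sock.sendall(build_message(msg_type, samples))


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, looping because recv() may return fewer."""
    chunks = []
    remaining = n
    while remaining > 0:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("socket closed mid-message")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def recv_message(sock: socket.socket) -> Tuple[int, List[float]]:
    # First chunk: the fixed-size header, which gives the payload size.
    msg_type, payload_len = HEADER.unpack(recv_exact(sock, HEADER.size))
    # Second chunk: the payload, assumed here to be float32 samples (4 bytes each).
    payload = recv_exact(sock, payload_len)
    samples = list(struct.unpack(f"<{payload_len // 4}f", payload))
    return msg_type, samples


if __name__ == "__main__":
    sender, receiver = socket.socketpair()
    send_message(sender, 1, [0.5, -0.25])
    print(recv_message(receiver))  # (1, [0.5, -0.25])
    sender.close()
    receiver.close()
```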

Issuing multiple send() calls can introduce longer gaps between packets, so some recv() calls have to block and wait for the rest of the message. This shows up as high apparent CPU usage for the plug-in (because those blocking calls run on the render thread) and, quite often, as choppy audio.
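
One well-known mechanism behind such gaps, not mentioned in the presentation, is Nagle's algorithm: on a TCP connection it holds back a small outgoing segment while earlier data is still unacknowledged, and if the receiver delays its acknowledgement, the held segment can wait tens or even hundreds of milliseconds. The Python sketch below contrasts piecewise sending with sending a message assembled in memory, and shows how to disable Nagle's algorithm with the standard `TCP_NODELAY` socket option. The function names are hypothetical, and whether `TCP_NODELAY` helps in a particular setup is an assumption worth measuring; it complements the single-send approach rather than replacing it.

```python
import socket
from typing import Sequence


def send_piecewise(sock: socket.socket, parts: Sequence[bytes]) -> None:
    """Anti-pattern: a separate call per header and chunk. Each call may go
    out as its own small segment, and later ones can be held back."""
    for part in parts:
        sock.sendall(part)


def send_assembled(sock: socket.socket, parts: Sequence[bytes]) -> None:
    """Preferred: join the whole message in memory, then hand it over at once."""
    sock.sendall(b"".join(parts))


def connect_low_latency(host: str, port: int) -> socket.socket:
    """Open a TCP connection with Nagle's algorithm disabled."""
    sock = socket.create_connection((host, port))
    # TCP_NODELAY stops the stack from holding back small writes while
    # earlier data is still unacknowledged.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock
```

Both send functions deliver the same bytes; the difference lies only in how the stack is likely to packetize and time them, which is why the assembled form is the safer default for a render-thread protocol.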